Rumored RTX Refresh - Memory Clocks?

Published:

by: Jack 'NavJack27' Mangano

Estimated reading time: ~4 minutes

Please throw some money my way, either directly via PayPal or through my Ko-Fi. Maybe hold off on my Patreon until I have fully set it up.

There’s a rumor floating around that Nvidia might release an updated 2080 Ti with slightly more cores and much faster memory clocks. I decided to run some memory scaling benchmarks to figure out what Nvidia might be able to squeeze from this silicon stone.

The Tweets

These are some of the tweets that prompted me to do this testing.

Perhaps the new dies that no longer have the A/non-A moniker? Or maybe it is the rumoured refresh with 16Gbps GDDR6

— Charlie (@ghost_motley) May 10, 2019

There have been rumours NVIDIA will refresh the 2080 Ti with 4480 CUDA cores (128 more), will have 12GB GDDR6 running at 16Gbps on a 384bit memory bus, which would be 768GB/s (up from the 616GB/s on the current 2080 Ti)

— Charlie (@ghost_motley) May 16, 2019

That combined with a price drop, I'd get an RTX 2080 Ti.
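The bandwidth figures quoted in the second tweet can be sanity-checked with the usual peak-bandwidth formula: per-pin data rate times bus width, divided by 8 bits per byte. This is only a rough sketch. The function name is made up for illustration, and the current card's 14Gbps data rate and 352-bit bus are the stock 2080 Ti specs rather than numbers from the tweet.

```python
def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in Gbit/s (e.g. 14 for 14Gbps GDDR6)
    bus_width_bits: width of the memory bus in bits
    """
    return data_rate_gbps * bus_width_bits / 8  # 8 bits per byte


# Stock 2080 Ti (assumed here: 14Gbps GDDR6 on a 352-bit bus)
current = peak_bandwidth_gb_s(14, 352)

# Rumored refresh from the tweet: 16Gbps GDDR6 on a 384-bit bus
rumored = peak_bandwidth_gb_s(16, 384)

uplift_percent = (rumored / current - 1) * 100

print(f"current: {current:.0f} GB/s")
print(f"rumored: {rumored:.0f} GB/s")
print(f"uplift:  {uplift_percent:.1f}%")
```

Both results match the tweet (616GB/s and 768GB/s), which works out to roughly a 25% increase in theoretical bandwidth.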

My Thoughts on The Matter

Nvidia could totally release an updated SKU with nothing more than higher memory clock speeds, thanks to better-sourced RAM chips. It would be a valid update that wouldn’t cost all that much to do. Adding cores to the chip seems a bit extreme. Honestly, I think it is just them phasing out the A and non-A die distinction from the initial launch, with zero changes to the cards. Still, testing this theory does lend some credence to at least selling new variants with higher VRAM speed, as you will see below.

Testing

Rig Specs

- Intel Core i7-8700K @ 5GHz core, 4.4GHz cache

- Gigabyte Aorus Z370 Gaming 7

- EVGA 2080 Ti XC Black

- 32GB Corsair DDR4-2800

- 480GB SanDisk Ultra SSD Boot Drive

- 960GB PNY CS1311 SSD Data Drive

- SeaSonic Prime Titanium 850W

Testing Software

I kept it simple and tested only Battlefield V, since that is the only title I own that uses ray tracing.

Testing Methodology

I used OCAT to capture the frame time data and EVGA Precision X1 to set my clock speeds and run the fans at max. The test run is the first scene of Battlefield V’s fourth chapter, from the point where you gain control and the HUD shows up until you reach a big log in the path up the first hill. Getting a simple 1000MHz overclock on my VRAM is pretty much a given for my card, so I tested at stock and at +1000. I tested 1080p with RT and 4K with DLSS+RT, plus DX12 without RT and DX11 without RT. The memory clock speed was the only thing that changed between the tests; the power target and voltage were set to max for every run.
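For anyone who wants to crunch their own captures, here is a minimal sketch of how per-frame times can be turned into an average frame rate and "percent low" figures. It is not the tool that produced the charts in this post. The function name is hypothetical, it takes a plain list of frame times in milliseconds rather than parsing a capture file, and it assumes one common definition of percent lows (the average of the slowest 1% or 0.1% of frames); other tools define them differently.

```python
from statistics import mean


def fps_summary(frame_times_ms):
    """Summarise one capture given its per-frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("no frames captured")

    # Average FPS = frames rendered / total capture time in seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_seconds

    # Sort so the slowest (longest) frames come first.
    slowest_first = sorted(frame_times_ms, reverse=True)

    def percent_low(fraction):
        # Assumed definition: mean frame time of the slowest `fraction`
        # of frames (at least one frame), expressed as FPS.
        count = max(1, int(len(slowest_first) * fraction))
        return 1000.0 / mean(slowest_first[:count])

    return {
        "avg_fps": avg_fps,
        "low_1pct_fps": percent_low(0.01),
        "low_0_1pct_fps": percent_low(0.001),
    }


# Made-up example data: 99 frames at 10 ms and a single 20 ms hitch.
example = fps_summary([10.0] * 99 + [20.0])
print(example)
```

Running the same summary on a stock capture and a +1000 capture of the same scene gives a quick like-for-like comparison of average frame rate and frame time consistency.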

The Results

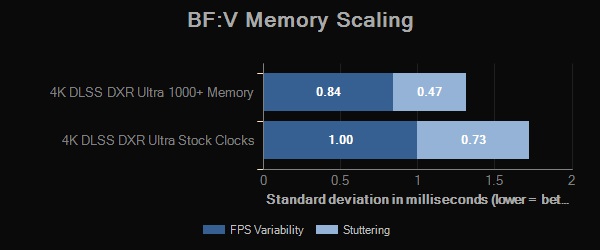

4K + DLSS+RT

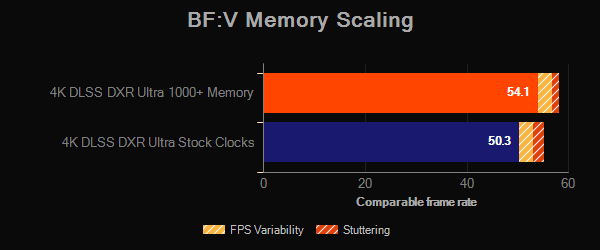

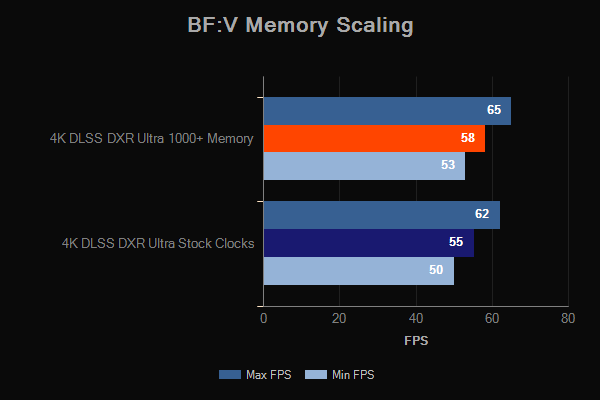

4K + DLSS+RT Comparable Frame Rate

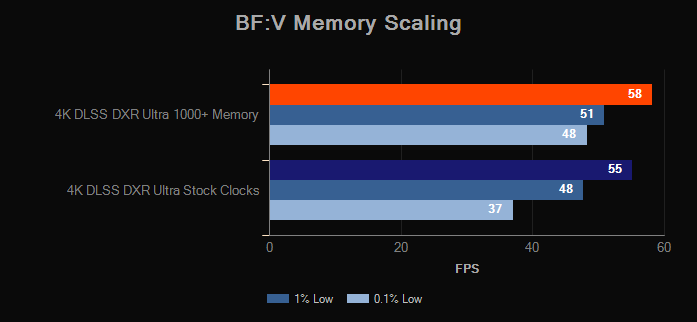

4K + DLSS+RT Percent Lows

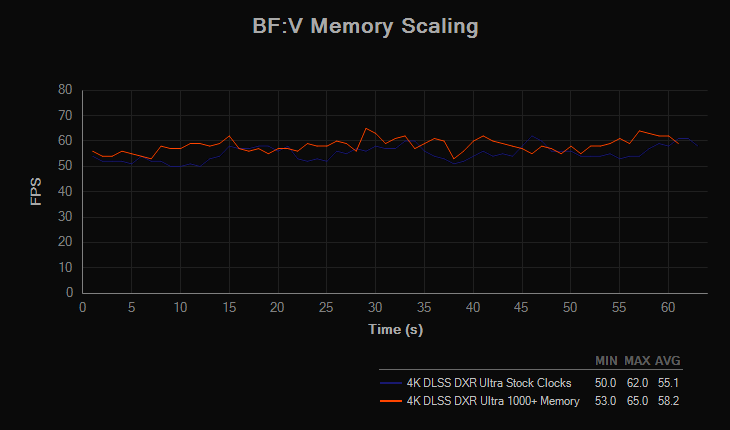

4K + DLSS+RT FPS Graph

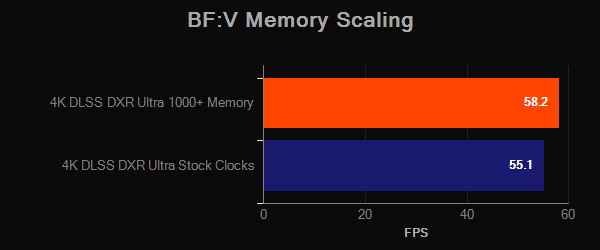

4K + DLSS+RT FPS Chart

4K + DLSS+RT FPS Min Max Avg

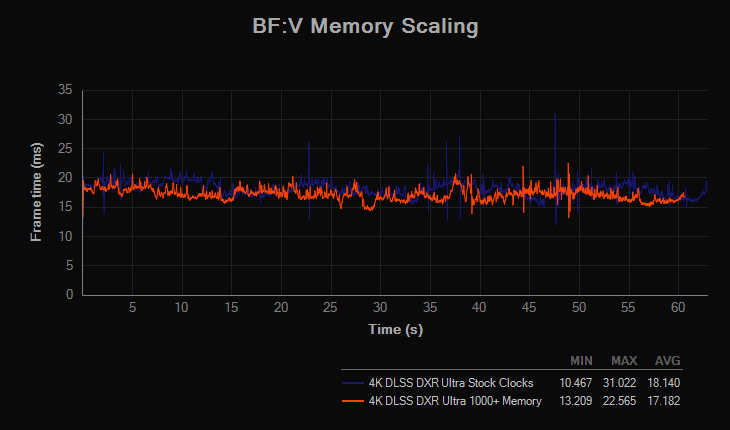

4K + DLSS+RT Frame Time Graph

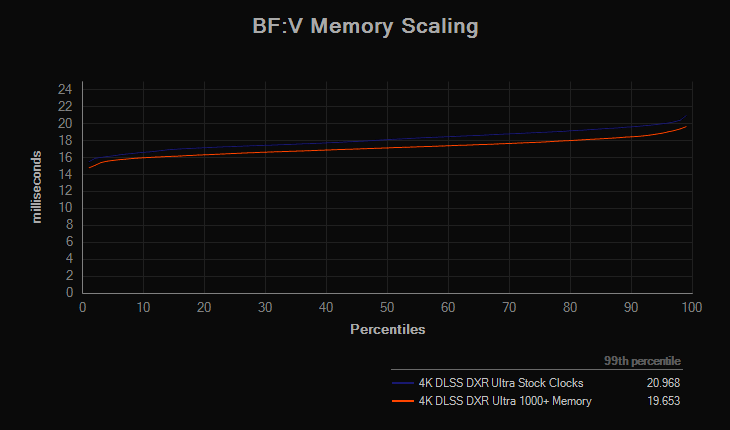

4K + DLSS+RT Percentile Graph

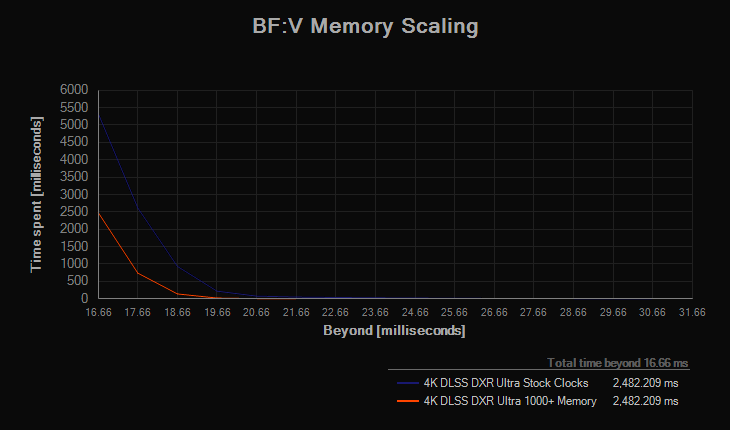

4K + DLSS+RT Time Spent Graph

4K + DLSS+RT Frame Stutter Chart

1080P + RT

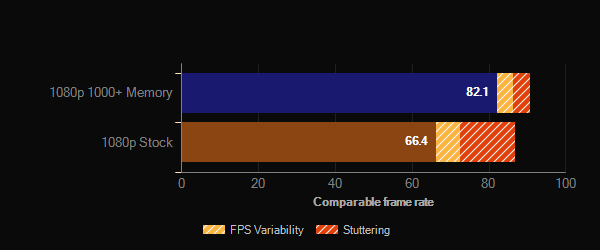

1080P + RT Comparable Frame Rate

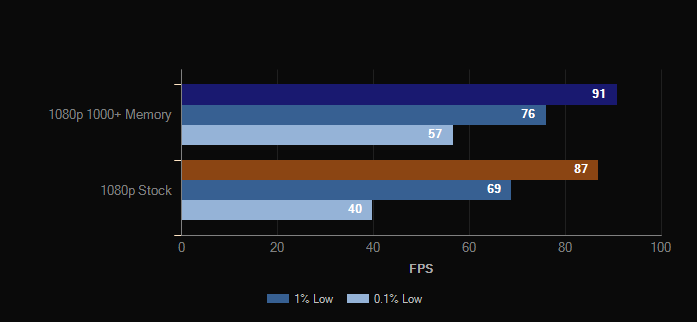

1080P + RT Percent Lows

1080P + RT FPS Graph

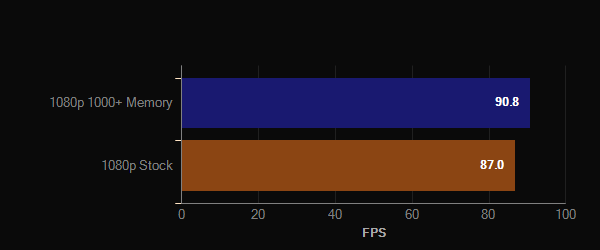

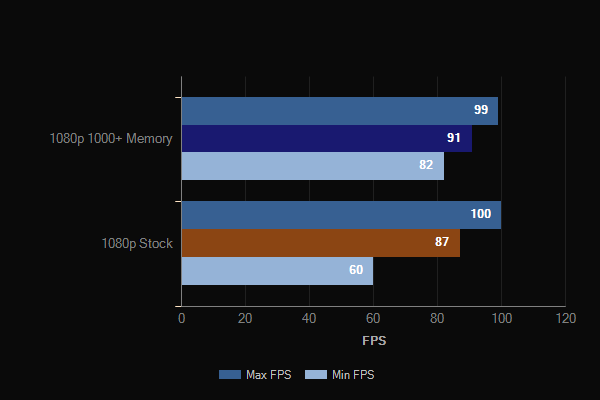

1080P + RT FPS Chart

1080P + RT FPS Min Max Avg

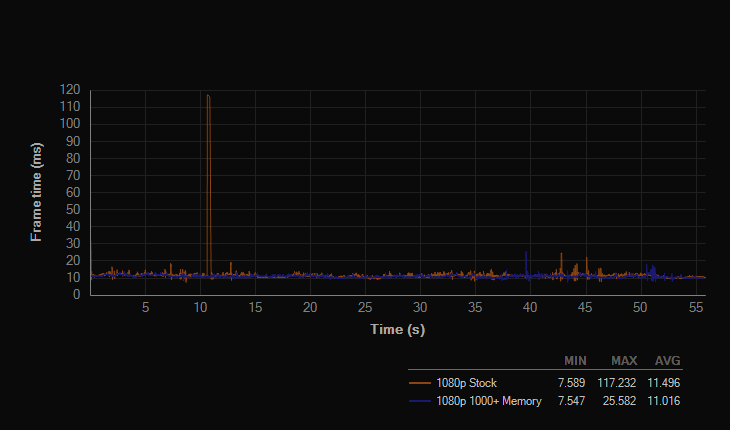

1080P + RT Frame Time Graph

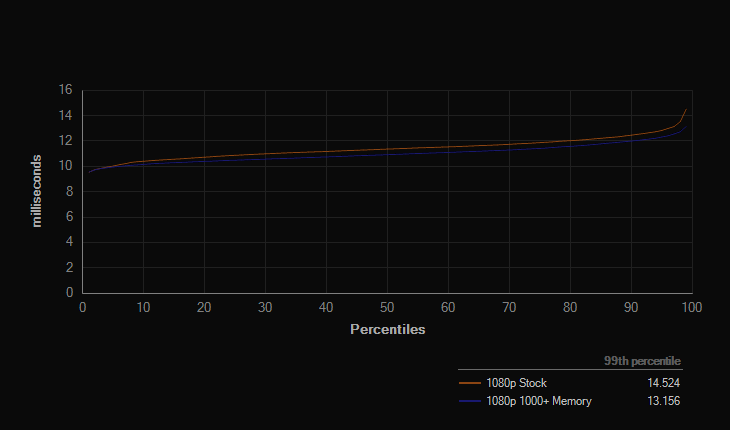

1080P + RT Percentile Graph

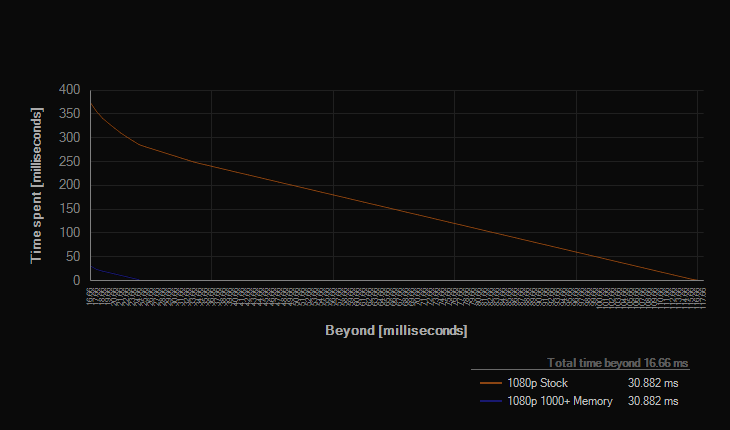

1080P + RT Time Spent Graph

1080P + RT Frame Stutter Chart

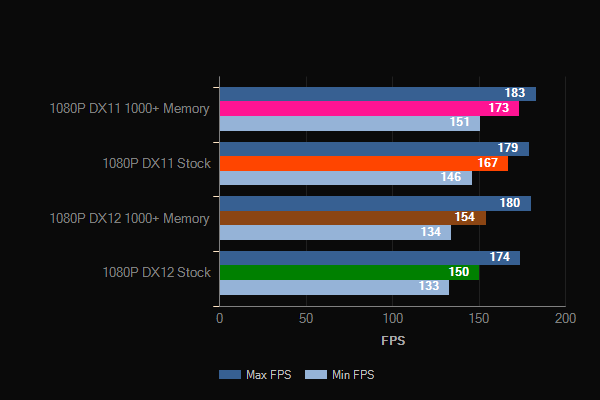

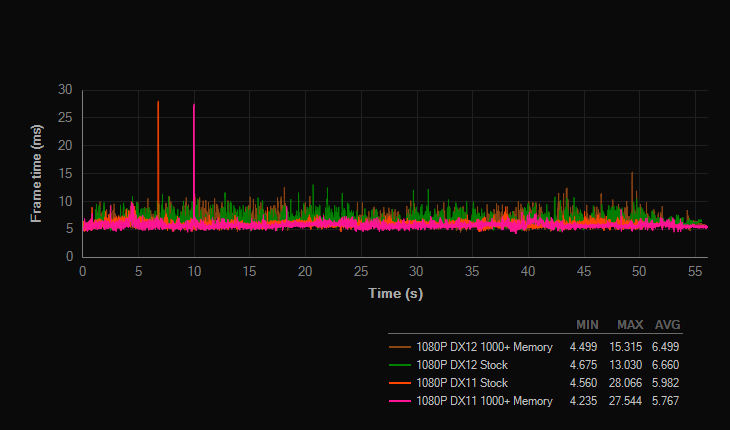

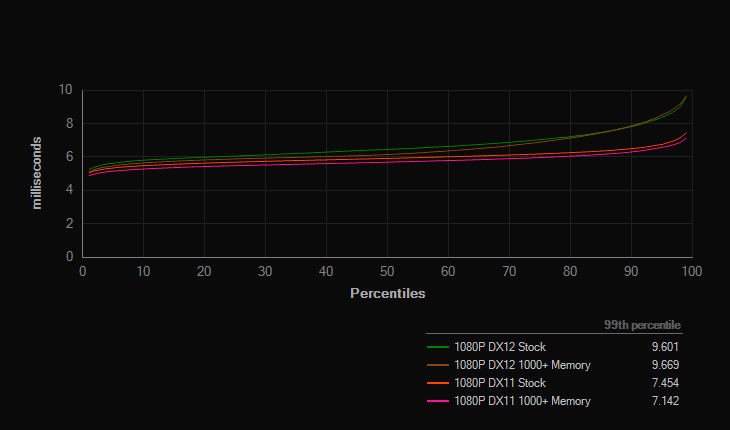

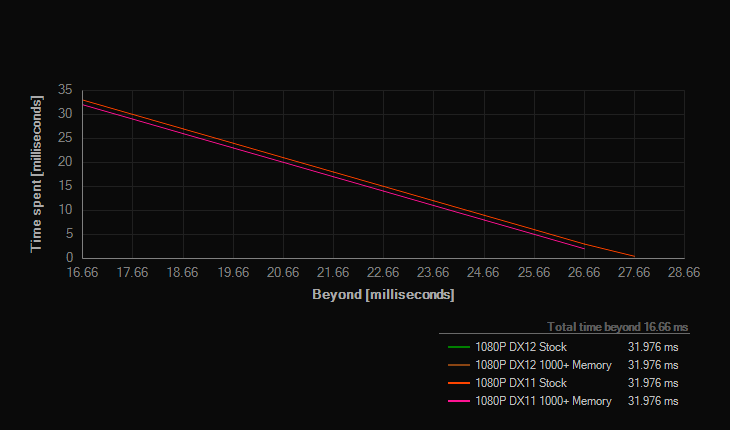

1080P + no RT (DX12 & DX11)

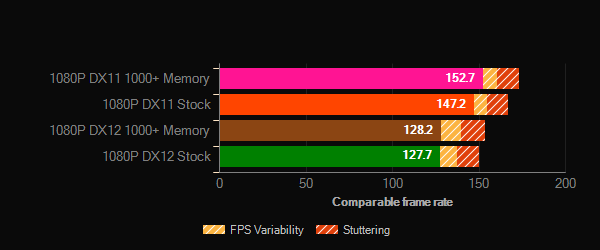

1080P + no RT (DX12 & DX11) Comparable Frame Rate

1080P + no RT (DX12 & DX11) Percent Lows

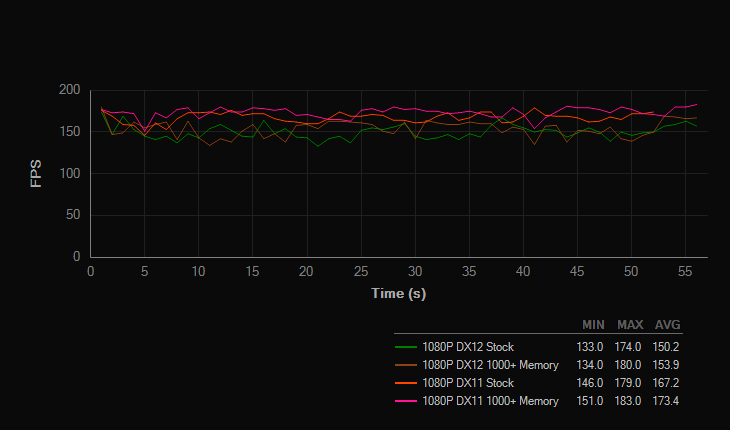

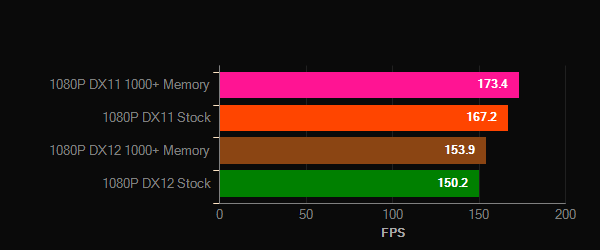

1080P + no RT (DX12 & DX11) FPS Graph

1080P + no RT (DX12 & DX11) FPS Chart

1080P + no RT (DX12 & DX11) FPS Min Max Avg

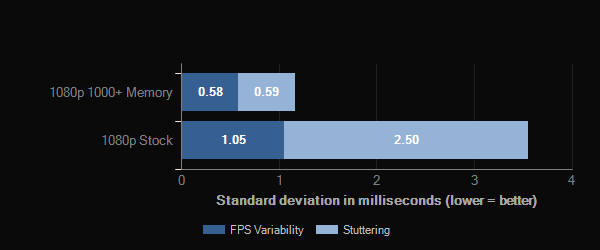

1080P + no RT (DX12 & DX11) Frame Time Graph

1080P + no RT (DX12 & DX11) Percentile Graph

1080P + no RT (DX12 & DX11) Time Spent Graph

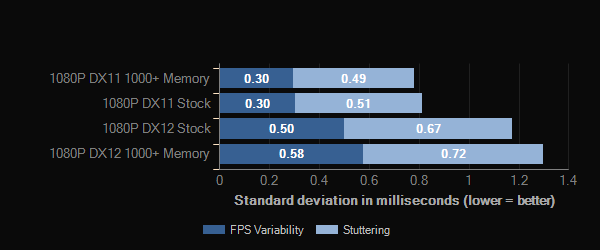

1080P + no RT (DX12 & DX11) Frame Stutter Chart

Thoughts

No matter what the task is, a simple VRAM overclock can work magic for keeping frame times consistent. If Nvidia released a new tier of RTX cards with nothing but faster VRAM, it could end up being a higher performance class across the board. It could also lure in potential customers who were on the fence about upgrading, without making current RTX owners feel too bad about their cards.